Kodosumi

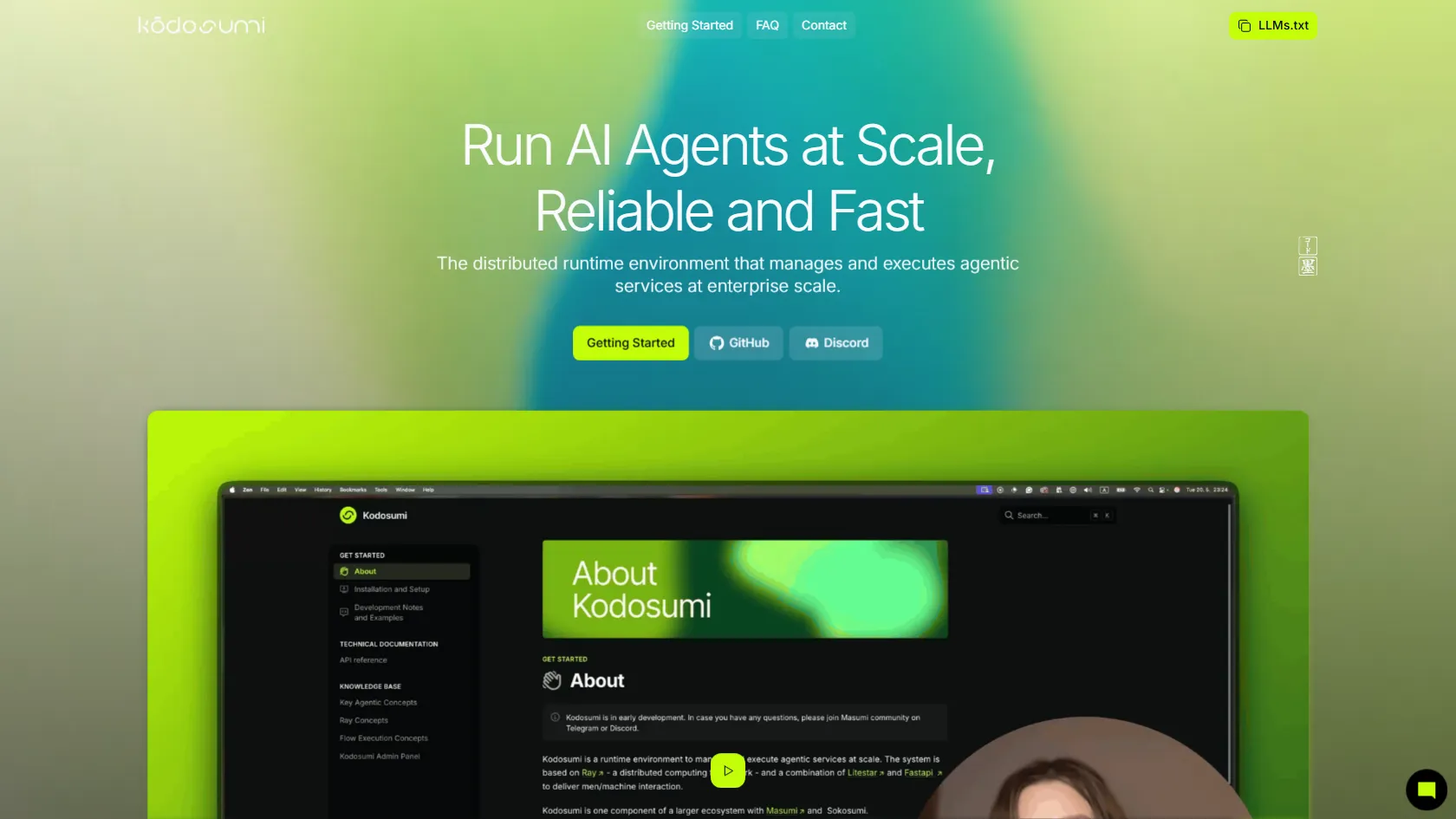

Open-source runtime for deploying and scaling AI agents.

Kodosumi is an open-source runtime environment that lets developers deploy and scale AI agents efficiently. It uses Ray for distributed computing and supports fast, scalable, free deployment of agentic services. It targets enterprise-scale applications, requires minimal configuration, and is framework-agnostic.

Pricing: Free

How to use Kodosumi?

Kodosumi lets developers deploy AI agents at scale with minimal setup. With a single YAML config file, users can deploy agents locally or in the cloud, manage long-running workflows, and integrate any LLM or agent framework. It is built on Ray, which provides the reliability and scalability that complex agentic workflows need.


Most impacted jobs

Software Developers

AI Researchers

Data Scientists

DevOps Engineers

Machine Learning Engineers

Technical Leads

Startup Founders

Enterprise Architects

Cloud Engineers

System Administrators

Kodosumi's Tags

#AI Agents · #Open Source · #Scalability · #Ray · #Framework Agnostic · #Real-time Monitoring · #Distributed Computing